- #Install apache spark ubuntu install#

- #Install apache spark ubuntu zip file#

- #Install apache spark ubuntu download#

#Install apache spark ubuntu install#

If you follow the steps, you should be able to install PySpark without any problem. Download Spark first and remember the directory where you downloaded it. I got it in my default Downloads folder, where I will install Spark.

First, check whether Java is installed by running `java -version` in the terminal. If it is, the output includes lines like these:

- `openjdk version "1.8.0_212"`
- `OpenJDK 64-Bit Server VM (build 25.212-b03, mixed mode)`

If you don't get this output, run the following command in the terminal: `sudo apt install openjdk-8-jdk`. After installation, `java -version` should print the output shown above.

Set the $JAVA_HOME Environment Variable

For this, run the following in the terminal: `sudo vim /etc/environment`. Then, in a new line after the PATH variable, add `JAVA_HOME="/usr/lib/jvm/java-8-openjdk-amd64"`. Later, in the terminal, run `source /etc/environment`. Don't forget to run this last command, as it loads the variable into the currently running shell. If you then run `echo $JAVA_HOME`, the output should be `/usr/lib/jvm/java-8-openjdk-amd64`.

Some versions of Ubuntu do not read the `/etc/environment` file every time we open the terminal, so it's better to add the variable to the `.bashrc` file as well. The `.bashrc` file is loaded every time a terminal is opened. So run the following command in the terminal: `vim ~/.bashrc`. When the file opens, add the `JAVA_HOME` line at the end (in `.bashrc` it is written with `export`, as `export JAVA_HOME="/usr/lib/jvm/java-8-openjdk-amd64"`). We will add the Spark variables below it later.

Then reload the `.bashrc` file in the terminal by running `source ~/.bashrc`. Or you can exit this terminal and open a new one. This method is best for WSL (Windows Subsystem for Linux) Ubuntu. By now, if you run `echo $JAVA_HOME`, you should get the expected output, `/usr/lib/jvm/java-8-openjdk-amd64`.
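Editing `.bashrc` by hand makes it easy to add the same line twice when the steps are repeated. The following is a minimal sketch, not part of the original steps, of a helper that appends a line to a file only if that exact line is not already there. The function name `append_once` is hypothetical, and the example call is left as a comment so that running the block does not modify any file.

```shell
# append_once LINE FILE
# Appends LINE to FILE unless FILE already contains that exact line.
# (Hypothetical helper name; uses only standard grep/printf/touch.)
append_once() {
  _line="$1"
  _file="$2"
  touch "$_file"                          # create the file if it is missing
  if ! grep -qxF -- "$_line" "$_file"; then
    printf '%s\n' "$_line" >> "$_file"    # add the line only once
  fi
}

# Example call (commented out so nothing is changed when this is pasted):
# append_once 'export JAVA_HOME="/usr/lib/jvm/java-8-openjdk-amd64"' ~/.bashrc
```

After calling it, `source ~/.bashrc` still has to be run for the current shell to pick up the change.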

#Install apache spark ubuntu zip file#

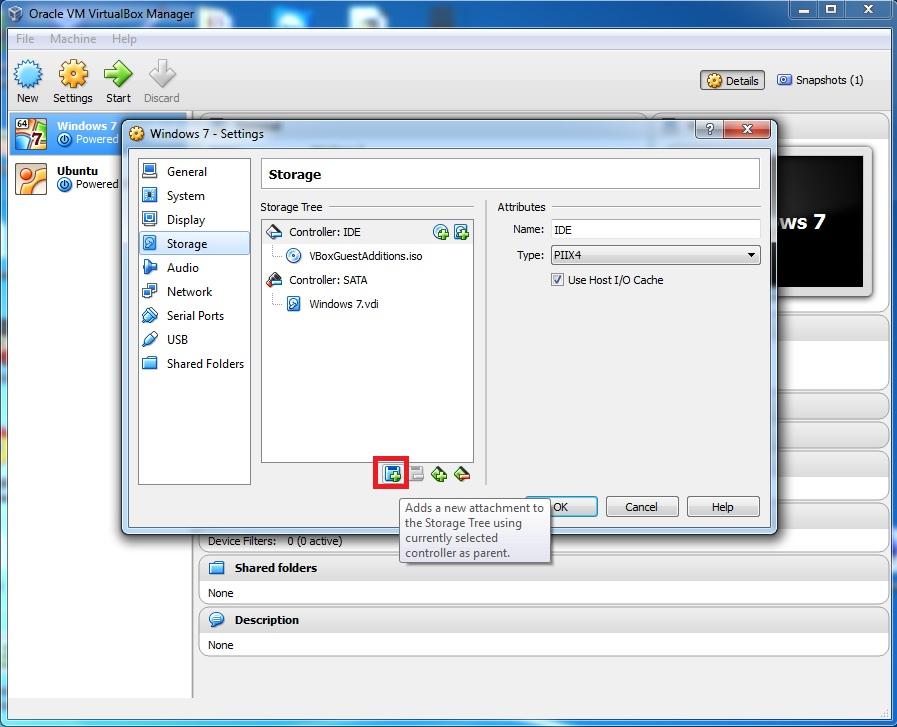

Go to the directory where the Spark archive was downloaded and extract it to install it: run `cd Downloads`, then `sudo tar -zxvf spark-2.4.3-bin-hadoop2.7.tgz`. Note: if your Spark file is a different version, correct the name accordingly. Alternatively, if you already have Python and pip installed, PySpark can be installed with pip instead of the manual way.

Configure Environment Variables for Spark

This step is only needed if you installed Spark the "Manual Way". Open the file with `vim ~/.bashrc` and add the following at the end:

- `export SPARK_HOME=~/Downloads/spark-2.4.3-bin-hadoop2.7`
- `export PYTHONPATH=$SPARK_HOME/python:$PYTHONPATH`
- `export PYSPARK_DRIVER_PYTHON_OPTS="notebook"`

Reload the `.bashrc` file again in the terminal with `source ~/.bashrc`.

Finally, launch the Spark shell. Its startup output begins with warnings such as:

- `WARNING: An illegal reflective access operation has occurred`
- `... Platform (file:/opt/spark/jars/spark-unsafe_2.12-3.0.1.jar) to constructor (long,int)`
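Because the `SPARK_HOME` value has to match the name of the extracted folder, it can help to derive it from the archive name rather than typing it. The sketch below assumes the archive ends in `.tgz` and is extracted in the directory it was downloaded to, as in the steps above. The function name `spark_home_from_archive` is hypothetical.

```shell
# spark_home_from_archive ARCHIVE_PATH
# Prints the directory that extracting ARCHIVE_PATH in place would create,
# assuming the archive is named <folder>.tgz (an assumption of this sketch).
spark_home_from_archive() {
  _dir="$(dirname "$1")"            # folder holding the archive
  _name="$(basename "$1" .tgz)"     # archive name without the .tgz suffix
  printf '%s/%s\n' "$_dir" "$_name"
}

# Example: print the export line for the archive used in this guide.
printf 'export SPARK_HOME=%s\n' \
  "$(spark_home_from_archive "$HOME/Downloads/spark-2.4.3-bin-hadoop2.7.tgz")"
```

The printed line can then be pasted into `~/.bashrc`. For a different Spark version, only the archive path passed to the function changes.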

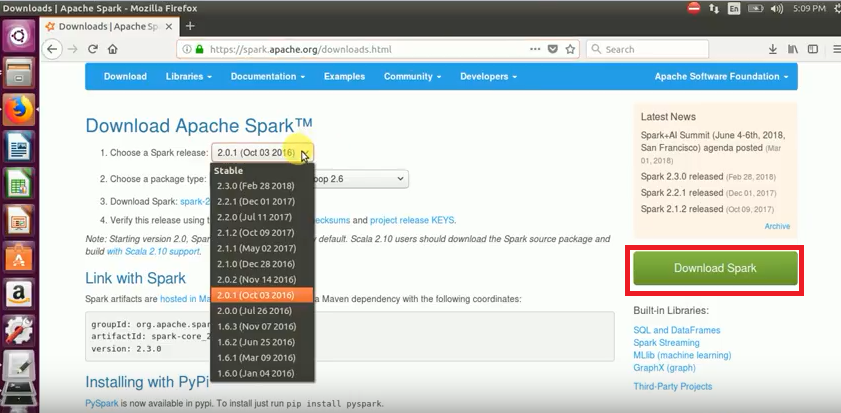

The rest of the Spark shell startup output looks like this:

- `WARNING: Illegal reflective access by ...`
- `WARNING: Please consider reporting this to the maintainers of ... Platform`
- `WARNING: Use --illegal-access=warn to enable warnings of further illegal reflective access operations`
- `WARNING: All illegal access operations will be denied in a future release`
- `20/09/09 22:48:09 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable`
- `Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties`
- `To adjust logging level use sc.setLogLevel(newLevel).`
- `Spark context available as 'sc' (master = local, app id = local-1599706095232).`

In our case we are interested in installing Spark 2.0.0, so we are going to install Scala 2.11.7. In one of our previous articles, we explained the steps for installing Scala on Ubuntu Linux.

#Install apache spark ubuntu download#

Step 3: Download Apache Spark

Download Spark 2.2.0, prebuilt for Hadoop 2.6, from the Apache Spark website.

Once the Spark shell has started, its banner ends with lines like these:

- `Using Scala version 2.12.10 (OpenJDK 64-Bit Server VM, Java 11.0.8)`
- `Type in expressions to have them evaluated.`
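This guide shows Java versions in two formats: `1.8.0_212` from `java -version` and `11.0.8` in the Spark shell banner. When checking which Java a machine is actually using, it can be handy to reduce either format to a major version number. The following is a small sketch of that. The function name `java_major_version` is hypothetical, and the pipeline in the comment assumes the version appears in double quotes on the first line of `java -version`, as in the output quoted earlier.

```shell
# java_major_version VERSION_STRING
# Prints the major Java version for strings like 1.8.0_212 or 11.0.8.
java_major_version() {
  _first="${1%%.*}"                 # text before the first dot
  if [ "$_first" = "1" ]; then
    _rest="${1#*.}"                 # old scheme: drop the leading "1."
    printf '%s\n' "${_rest%%.*}"
  else
    printf '%s\n' "$_first"         # new scheme: first field is the major version
  fi
}

java_major_version 1.8.0_212   # prints 8
java_major_version 11.0.8      # prints 11

# With Java installed, the version string can be taken from the real output
# (java -version writes to stderr, hence the 2>&1):
# java_major_version "$(java -version 2>&1 | head -n 1 | cut -d'"' -f2)"
```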
